Introduction

Polymorphism through dynamic dispatch (a base class, plus multiple subclasses that each override a base class method) is the standard way to handle variation in object-oriented code. But it’s not the only choice, and in many cases it offers too much dynamism. The point of dynamic dispatch is to have the code select a branch based on runtime conditions. It is designed to allow the same code to trigger a different pathway each time it is run. If you don’t actually need this type of late (runtime) binding, then you have other options for dispatching earlier than runtime. The advantage of using early binding when it is appropriate is that the program correctly expresses constness: if something is the same on every execution, this should be reflected in the code. Doing a runtime check in such a situation is needlessly pushing a potential bug out from compile/static analysis time to runtime.

A good example is situations where you have multiple implementations of some library available. Maybe one implementation is available on one platform and another is available on another platform. Or, one is a different manufacturer’s implementation and you’re experimenting with adopting it but not yet sure it’s ready for adoption. In these situations, you have variation: your code should either call one implementation or another. But is this variation really happening at runtime? Or, is it happening at build time? Are you choosing, when you build your program, which implementation is going to be used, for every execution?

The Dynamic Dispatch Solution

The obvious, or I might say naive, way to handle this is with typical polymorphism. First I’ll create a package LibraryInterface that just contains the interface:

public interface LibraryFacade

{

void doSomething();

void doSomethingElse();

}

Then I’ll make two packages. OldLibrary implements the interface with the implementation provided by the manufacturer CoolLibrariesInc. NewLibrary implements it with one provided by the manufacturer SweetLibrariesInc. Since these both reference the interface, both packages need to import LibraryInterface:

import LibraryInterface;

class OldLibraryImplementation : LibraryFacade

{

override void doSomething()

{

// Call CoolLibrariesInc stuff

}

override void doSomethingElse()

{

// Call CoolLibrariesInc stuff

}

private CoolLibrariesClass UnderlyingObject;

}

import LibraryInterface;

class NewLibraryImplementation : LibraryFacade

{

override void doSomething()

{

// Call SweetLibrariesInc library stuff

}

override void doSomethingElse()

{

// Call SweetLibrariesInc library stuff

}

private SweetLibrariesClass UnderlyingObject;

}

Then, somewhere, we have a factory method that gives us the right implementation class. Naively we would put that in LibraryInterface:

public static class LibraryFactory

{

public static LibraryFacade library();

}

But a method with no body would have to be abstract, and we can’t do that because it’s static (it wouldn’t make sense anyway). If we try to compile LibraryInterface with this, the compiler will rightly complain that the factory method is missing its body. The most common way I’ve seen to deal with this (and I’ve done it myself many times) is to move the factory method into the application that uses the library. Then I implement it according to which library I want to use:

import OldLibrary;

import NewLibrary;

public static class LibraryFactory

{

// We’re using the new library right now

public static LibraryFacade library()

{

return new NewLibraryImplementation();

// return new OldLibraryImplementation();

}

}

Then in my application I use the library by calling the factory to get the implementation and call the methods via the interface:

LibraryFacade library = LibraryFactory.library();

library.doSomething();

If I want to switch back to the old library, I don’t need to change any of the application code except for the factory method. Cool!

This is already a bit awkward. First of all, in order for the factory file to compile, we have to link in both the old and new libraries because of the import statements. Importing both doesn’t break anything. In fact, we have all the mechanisms needed to use both implementations in different parts of the app, or switch from one to the other mid-execution. Do we really need this capability? If the intention is that we pick one or the other at build time and stick with it, then wouldn’t it be ideal for the choice to be made simply by linking one library in, and better yet signal a build error if we try to link in both?

When It Starts to Smell

Let’s say our library interface is a little more sophisticated. Instead of just one class, we have two, and they interact with each other:

public interface LibraryStore

{

LibraryEntity FetchEntity(string Id);

…

void ProcessEntity(LibraryEntity Entity);

}

public interface LibraryEntity

{

string Id { get; }

}

Now, we have the old library’s implementation of these entities, and presumably each one is wrapping some CoolLibrariesInc class:

internal class OldLibraryStore: LibraryStore

{

LibraryEntity FetchEntity(string Id)

{

CoolLibraryEntity UnderlyingEntity = UnderlyingStore.RetrieveEntityForId(Id);

return new OldLibraryEntity(UnderlyingEntity);

}

void ProcessEntity(LibraryEntity Entity)

{

if(Entity is not OldLibraryEntity OldEntity)

throw new Exception("What are you doing!? You can't mix library implementations!");

CoolLibraryEntity UnderlyingEntity = OldEntity.UnderlyingEntity;

UnderlyingStore.DoWorkOnEntity(UnderlyingEntity);

}

…

private CoolLibraryStore UnderlyingStore;

}

internal class OldLibraryEntity: LibraryEntity

{

string Id => UnderlyingEntity.GetId();

…

internal CoolLibraryEntity UnderlyingEntity;

}

Notice what we have to do in ProcessEntity. We have to take the abstract LibraryEntity passed in and downcast it to the one with the “matching” implementation. What do we do if the downcast fails? Well, the only sensible thing is to throw an exception. We certainly can’t proceed with the method otherwise, and it certainly indicates a programming error.

Now, if you know me, you know the first thing I think when I see an exception (other than “thank God it’s actually failing instead of sweeping a problem under the rug”) is “does this need to be a runtime failure, or can I make it a compile time failure?” The error is mixing implementations. Is this ever okay? No, it isn’t. That’s a programming error every time it happens, period. That means it’s an error as soon as I write the code that takes one implementation’s entity and passes it to another implementation’s store. I need to strengthen my type system to prevent me from doing this. Let’s make the Store interface parameterized by the Entity type it can work with:

public interface LibraryStore<Entity> where Entity : LibraryEntity

{

Entity FetchEntity(string Id);

…

void ProcessEntity(Entity entity);

}

Then the old implementation is:

public class OldLibraryStore: LibraryStore<OldLibraryEntity>

{

OldLibraryEntity FetchEntity(string Id)

{

CoolLibraryEntity UnderlyingEntity = UnderlyingStore.RetrieveEntityForId(Id);

return new OldLibraryEntity(UnderlyingEntity);

}

void ProcessEntity(OldLibraryEntity Entity)

{

CoolLibraryEntity UnderlyingEntity = Entity.UnderlyingEntity;

UnderlyingStore.DoWorkOnEntity(UnderlyingEntity);

}

…

private CoolLibraryStore UnderlyingStore;

}

I need to make the implementing classes public now as well.

Awesome, we beefed that exception up into a type error. If I made that mistake somewhere, I’ll now get yelled at for trying to send a mere LibraryEntity (or even worse an OldLibraryEntity) to the NewLibraryStore. I’ll probably need to adjust my client code to keep track of the fact it’s now getting a NewLibraryEntity back from FetchEntity instead of just a LibraryEntity. Well… actually, I’ll first get yelled at for declaring a LibraryStore without specifying the entity type. First I have to change LibraryStore to LibraryStore<NewLibraryEntity>. And if I do that, I might as well just change them to NewLibraryStore.

Okay, but my original intention was to decouple my client code from which library implementation I have chosen. I just destroyed that. I have now forced the users of LibraryStore to care about which LibraryEntity they’re talking to, and by extension which LibraryStore.

Remember, we have designed a system that allows me to choose different implementations throughout my application code. I can’t mix them, but I can switch between them. If that’s what I need, then it is normal and expected that I force the application code to explicitly decide, at each use of the library, which one it’s going to use. Well, that sucks, at least if my goal was to make selecting one (for the entire application) a one-line change. This added “complexity” of the design correctly expresses the complexity of the problem.

The problem with this problem is it isn’t actually my problem.

(I’m really proud of that sentence)

I don’t need to be able to pick one implementation sometimes and another other times. I don’t need all of this complexity! What I need is a build-time selection of an implementation for my entire application. That precludes the possibility of accidentally mixing implementations. That simply can’t happen if the decision is made client-wide at build time. My design needs to reflect that.

An Alternative Approach

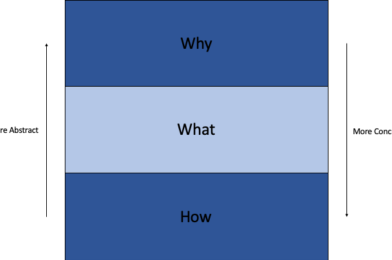

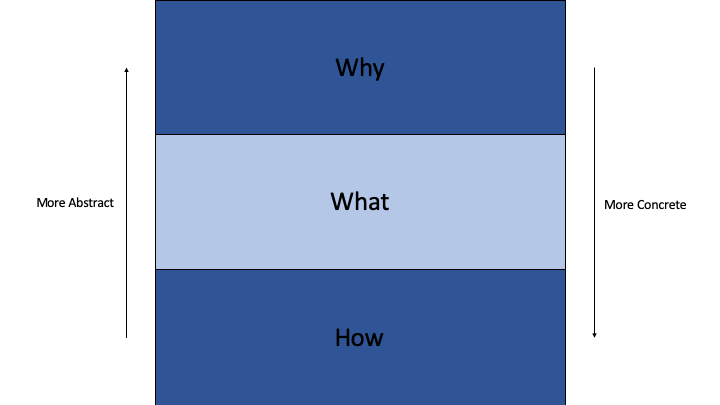

The fundamental source of frustration is that I chose the wrong tool for this job. Dynamic dispatch? I don’t need or want the dispatch to be dynamic. That means doing the implementation “selection” as implementations of an interface is the wrong choice. I don’t want the decision of where to bind a library call to be made at the moment that call is executed. I want it to be bound at the moment my application is built.

Let’s try something else. I’m going to get rid of the LibraryInterface package altogether. Then OldLibrary will contain these classes:

public class LibraryStore

{

LibraryEntity FetchEntity(string Id)

{

CoolLibraryEntity UnderlyingEntity = UnderlyingStore.RetrieveEntityForId(Id);

return new LibraryEntity(UnderlyingEntity);

}

void ProcessEntity(LibraryEntity Entity)

{

CoolLibraryEntity UnderlyingEntity = Entity.UnderlyingEntity;

UnderlyingStore.DoWorkOnEntity(UnderlyingEntity);

}

…

private CoolLibraryStore UnderlyingStore;

}

public class LibraryEntity

{

string Id => UnderlyingEntity.GetId();

…

internal CoolLibraryEntity UnderlyingEntity;

}

NewLibrary will contain these classes:

public class LibraryStore

{

LibraryEntity FetchEntity(string Id)

{

SweetLibraryEntity UnderlyingEntity = UnderlyingStore.ReadEntity(Id);

return new LibraryEntity(UnderlyingEntity);

}

void ProcessEntity(LibraryEntity Entity)

{

SweetLibraryEntity UnderlyingEntity = Entity.UnderlyingEntity;

UnderlyingStore.ApplyProcessingToEntity(UnderlyingEntity);

}

…

private SweetLibraryStore UnderlyingStore;

}

public class LibraryEntity

{

string Id => UnderlyingEntity.UniqueId;

…

internal SweetLibraryEntity UnderlyingEntity;

}

I’ve totally gotten rid of the class hierarchies. There are simply two versions of each class, with each equivalent one named exactly the same. That means they’ll clash if they are both included in a program. That’s perfect, because if we accidentally link both libraries, we’ll get duplicate definition errors.
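The same idea can be sketched in a single C++ file. This is only an illustration under assumptions: the `USE_OLD_LIBRARY` macro, the `fetchId` helper and the `"old:"`/`"new:"` prefixes are all made up for the sketch, and the preprocessor switch stands in for what would really be a choice of which package to build or link.

```cpp
#include <cassert>
#include <string>
#include <utility>

// Hypothetical stand-ins for the two vendor wrappers. Both variants use the
// same class names, so only one of them can exist in a given build.
#if defined(USE_OLD_LIBRARY)

class LibraryEntity {
public:
    explicit LibraryEntity(std::string id) : id_(std::move(id)) {}
    std::string Id() const { return "old:" + id_; }
private:
    std::string id_;
};

class LibraryStore {
public:
    LibraryEntity FetchEntity(const std::string& id) const { return LibraryEntity(id); }
};

#else  // the "new" wrapper is the default in this sketch

class LibraryEntity {
public:
    explicit LibraryEntity(std::string id) : id_(std::move(id)) {}
    std::string Id() const { return "new:" + id_; }
private:
    std::string id_;
};

class LibraryStore {
public:
    LibraryEntity FetchEntity(const std::string& id) const { return LibraryEntity(id); }
};

#endif

// Client code names only LibraryStore and LibraryEntity, never a vendor.
inline std::string fetchId(const std::string& id) {
    LibraryStore store;
    return store.FetchEntity(id).Id();
}

inline void demo() {
    // Holds for either variant: a four-character prefix plus the id.
    assert(fetchId("42").size() == 6);
}
```

Removing the `#if`/`#else` so that both variants are compiled together produces a redefinition error, which is the clash described above.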

It took me a long time to realize this is even an option. This is a “weird” way to solve the problem. For one, the actual definition of the “interface” doesn’t exist anywhere. It is, in a way, duplicated and stuffed into each package. And, to be sure, there is an interface. An “interface” just means any standard for how code is called. In OO code, it means the public methods and members of classes. If we want to be able to switch implementations of a library without changing client code, a well-defined “interface” for the libraries is precisely what we need. So it’s natural we might think, “well if I need an interface, then I’m going to create what the language calls an interface!” But “interface” in languages like C# and Java (and the equivalent “Protocol” in ObjC/Swift) doesn’t just mean “a definition for how to talk to something”. It also signs you up for a specific way of binding calls. It signs you up for late binding, or dynamic dispatch.

Defining a Build-Time Interface

The fact there is no explicit definition of the library interface isn’t just inconvenient. We lose any kind of validation that we’ve correctly implemented the interface. We could make a typo in one of the methods and it will compile just fine, and we won’t find out until we switch to that implementation and try to compile our app. And the error we get won’t really tell us what happened. It will tell us we’re trying to call a method that doesn’t exist, when it should really tell us the library class is supposed to have this method but doesn’t.

This is the general problem with “duck typing”. If I pass a wrench to a method expecting a duck, or duck-like entity, instead of getting a sensible error like “you can’t pass this object here because it isn’t duck-like”, you get a wrench complaining that it doesn’t know how to quack. You have to reason backward to determine why a wrench was asked to quack in the first place. Also, this error is fundamentally undetectable until the quack message is sent, which means runtime, even though the error exists from the moment I passed a wrench to a method that declares it needs something duck-like. The point of duck typing is flexibility. But flexibility is equivalent to late error detection. That’s why too much flexibility isn’t helpful.

One way to solve this is to “define” the interface with tests. What we’re testing is the type system, which means we don’t need to actually run anything. We just need to compile something. So we can write a test that simply tries to call each expected method with the expected parameters:

// There’s no [Test] annotation here!

void testLibraryEntity()

{

var Entity = (LibraryEntity)null!;

string TestId = Entity.Id;

}

// There’s no [Test] annotation here!

void testLibraryStore()

{

var Store = (LibraryStore)null!;

LibraryEntity TestFetchEntity = Store.FetchEntity((string)null!);

Store.ProcessEntity((LibraryEntity)null!);

}

You might think I’m crazy for typing that out, but really it isn’t that ridiculous, and it does exactly what we want (it also indicates I’m truly a C++ dev at heart). I’ve highlighted that there are no [Test] annotations, which means this code won’t run when we hit the Test button. That’s good, because it would crash. We don’t want it to run. We just want it to compile. If that compiles, it proves our classes fulfill the intended interfaces. If it doesn’t compile, then we’re missing something.

(If you aren’t already interpreting compilation errors as failed tests, it’s never too late to start)
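For comparison, here is roughly how the same compile-only check could look in C++17, where `decltype` and `std::declval` let us ask what an expression would return without ever evaluating it. The class bodies are placeholders and `interfaceChecksPassed` is a hypothetical name used only in this sketch.

```cpp
#include <string>
#include <type_traits>
#include <utility>

// Placeholder library classes; the names mirror the ones used above.
class LibraryEntity {
public:
    std::string Id() const { return "placeholder"; }
};

class LibraryStore {
public:
    LibraryEntity FetchEntity(const std::string&) { return LibraryEntity{}; }
    void ProcessEntity(const LibraryEntity&) {}
};

// Nothing below calls the library. A missing method is a hard compile error
// inside the decltype; a wrong return type trips the static_assert message.
static_assert(
    std::is_same_v<decltype(std::declval<const LibraryEntity&>().Id()), std::string>,
    "LibraryEntity::Id() must return std::string");

static_assert(
    std::is_same_v<
        decltype(std::declval<LibraryStore&>().FetchEntity(std::declval<const std::string&>())),
        LibraryEntity>,
    "LibraryStore::FetchEntity(string) must return LibraryEntity");

static_assert(
    std::is_void_v<
        decltype(std::declval<LibraryStore&>().ProcessEntity(std::declval<const LibraryEntity&>()))>,
    "LibraryStore::ProcessEntity(LibraryEntity) must return void");

// If this translation unit compiles at all, every check above passed.
constexpr bool interfaceChecksPassed() { return true; }
```

Because the checks sit at namespace scope, there is no test body that could be run by accident.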

What would be nice is if languages could give us a way to define an interface that isn’t dynamically dispatched. What if I could do this:

interface ILibraryStore<Entity: ILibraryEntity>

{

Entity FetchEntity(string Id);

void ProcessEntity(Entity Entity);

}

interface ILibraryEntity

{

string Id { get; }

}

I could put these in a separate LibraryInterface package. Then in one library package:

public class LibraryStore: static ILibraryStore<LibraryEntity>

{

LibraryEntity FetchEntity(string Id)

{

SweetLibraryEntity UnderlyingEntity = UnderlyingStore.ReadEntity(Id);

return new LibraryEntity(UnderlyingEntity);

}

void ProcessEntity(LibraryEntity Entity)

{

SweetLibraryEntity UnderlyingEntity = Entity.UnderlyingEntity;

UnderlyingStore.ApplyProcessingToEntity(UnderlyingEntity);

}

…

private SweetLibraryStore UnderlyingStore;

}

public class LibraryEntity: static ILibraryEntity

{

string Id => UnderlyingEntity.UniqueId;

…

internal SweetLibraryEntity UnderlyingEntity;

}

By statically implementing the interface, I’m only creating a compile-time “contract” that I need to fulfill. If I forget one of the methods, or misname one, I’ll get a compiler error saying I didn’t implement the interface. That’s it. But then if I have a method like this:

void DoStuff(ILibraryEntity Entity)

{

string Id = Entity.Id; // What does the compiler do here?

}

I can’t pass a LibraryEntity in. I can only bind a variable of type ILibraryEntity to an instance of a class if that class non-statically implements ILibraryEntity, because such binding (up-casting) is a form of type erasure: I’m telling the compiler to forget exactly which subtype the variable is, to allow code to work with any subtype. For that to work, the methods on that type have to be dispatched dynamically. The decision by language designers to equate “interfaces” with dynamic dispatch was quite reasonable!

That means in my client code I still have to declare things as LibraryStore and LibraryEntity. In order to get the “build-time selection” I want, I still have to name the classes identically. That is a signal to the compiler both that they cannot coexist in a linked product, and that they get automatically selected by choosing one to link in. Then there’s the problem with importing. Since the packages are named differently, I’d have to change the import in every file that uses the library (at least until C# 10, which added global using directives). The same goes for Java. In fact, it’s a bit worse in Java. Java equates namespaces with packages, so if the packages are named differently the classes effectively have different names too, and they’ll coexist just fine in the application. In either case, you can name the packages identically. Then your build system will really throw a fit if you try to bring both of them in.

Is the notion of a “static” interface a pipe dream? Not at all. C++20 introduced essentially this very thing and called it concepts. Creating these compile-time-only contracts is a much bigger deal for C++ than for other languages, because of templates. In a language like C#, if I want to define a generic function that prints an array, I need an interface for stringifying something:

interface StringDescribable

{

string Description { get; }

}

// Requires: using System.Linq;

string DescribeArray<T>(T[] Array) where T : StringDescribable

{

var Descriptions = Array

.Select(Element => Element.Description);

return $"[{string.Join(", ", Descriptions)}]";

}

This requires the Description getter to be dynamically dispatched because of how generics work. This code is compiled once, so the same code is executed each time I call this function, even with different generic types. It therefore needs to dynamically dispatch the Description call to ensure it calls T’s implementation of it. Fundamentally, a different Description implementation can get called each time this function is executed. It’s a runtime variation, so it has to be late-bound.

The equivalent code with templates in C++ looks like this:

// Requires <algorithm>, <string> and <vector>

template<typename T> std::string describeArray(const T* array, size_t count)

{

std::vector<std::string> descriptions(count);

std::transform(

array,

array + count,

descriptions.begin(),

[] (const T& element) { return element.description(); }

);

return StringUtils::joined(", ", descriptions); // Some helper method we wrote to join strings

}

Notice the absence of any “constraint” on the template parameter T. No such notion existed in C++ until C++20. You don’t need to require that T implement some interface, because for each type T with which we instantiate this function, we get a totally separate compiled function. If our code does this:

SomeType someTypeArray[10];

SomeOtherType someOtherTypeArray[20];

…

describeArray(someTypeArray, 10);

describeArray(someOtherTypeArray, 20);

Then the compiler creates and compiles the following two functions:

std::string describeArray(const SomeType* array, size_t count)

{

std::vector<std::string> descriptions(count);

std::transform(

array,

array + count,

descriptions.begin(),

[] (const SomeType& element) { return element.description(); }

);

return StringUtils::joined(", ", descriptions);

}

std::string describeArray(const SomeOtherType* array, size_t count)

{

std::vector<std::string> descriptions(count);

std::transform(

array,

array + count,

descriptions.begin(),

[] (const SomeOtherType& element) { return element.description(); }

);

return StringUtils::joined(", ", descriptions);

}

These are totally different. They are completely unrelated code paths as far as the compiler/linker is concerned. We could even specialize the template and write completely different code for one of the cases.

Both of these will compile fine as long as both SomeType and SomeOtherType have a const method called description that returns a std::string (it doesn’t even need to return std::string, it can return anything that is implicitly convertible to a std::string). This is literal duck typing in action. Furthermore, declaring an “interface”, which in C++ is a class with only pure virtual methods, forces description to be dynamically dispatched, which forces every class implementing it to add a vtable entry for it. If any such class has no virtual methods except this one, we suddenly have to create a vtable for those classes and add the vpointer to the instances, which changes their memory layout. We probably don’t want that.
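To make the duck typing concrete, here is a small self-contained sketch. `Duck`, `Robot` and the free function `joined` are hypothetical stand-ins (the latter for the `StringUtils::joined` helper mentioned above); the two types share no base class, and `Robot::description` returns a `const char*` rather than a `std::string`.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper standing in for StringUtils::joined.
inline std::string joined(const std::string& separator, const std::vector<std::string>& parts) {
    std::string result;
    for (std::size_t i = 0; i < parts.size(); ++i) {
        if (i > 0) result += separator;
        result += parts[i];
    }
    return result;
}

template <typename T>
std::string describeArray(const T* array, std::size_t count) {
    std::vector<std::string> descriptions;
    descriptions.reserve(count);
    for (std::size_t i = 0; i < count; ++i) {
        // Compiles for any T whose const description() converts to std::string.
        descriptions.push_back(array[i].description());
    }
    return joined(", ", descriptions);
}

struct Duck {
    std::string description() const { return "duck"; }
};

struct Robot {
    const char* description() const { return "robot"; }  // not a std::string
};

inline void demo() {
    const Duck ducks[2] = {};
    const Robot robots[1] = {};
    assert(describeArray(ducks, 2) == "duck, duck");
    assert(describeArray(robots, 1) == "robot");
}
```

Neither struct has a virtual method, so neither pays for a vtable or a vpointer.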

If I accidentally invoke describeArray with some class that doesn’t provide such a function, I get a very similar problem as our example with libraries. It (correctly) fails to compile, but the error message we get is, uh… less than ideal. It just tells us that it tried to call a method that doesn’t exist. Any seasoned C++ dev knows that when you have template code several layers deep (and most STL stuff is many, many layers deep), mistakenly instantiating a template with a type that doesn’t fulfill whatever “contracts” the template needs results in some crazy error deep in the implementation guts. You literally have to debug your code’s compilation (walk up a veritable stack trace of template instantiations) to find out what went wrong and why. It sucks. It’s the metaprogramming version of having terrible exception diagnostics and being reduced to looking at stack traces.

This even suffers a form of late error detection. Let’s say I write one template method where the template parameter T has no requirement to be “describable”, but in it I call describeArray with an array of T. This is an error that should be detected as soon as I compile after writing this method. But it won’t be detected until later, when I actually instantiate this template method with a non-describable parameter. It’s a compile-time error, but it’s still too late, and still in a sense detected when something is executed instead of when it is written (C++ templates are a metaprogramming stage: code that writes code, so templates are executed at build time to produce the non-templated C++ code that then gets compiled as usual).
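A minimal sketch of that late detection, with hypothetical names (`describeFirst`, `Duck`, `Wrench`) and a simplified `describeArray` that joins inline: the wrapper template compiles even though nothing guarantees its `T` is describable, and the mistake only surfaces when someone instantiates it with the wrong type.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

template <typename T>
std::string describeArray(const T* array, std::size_t count) {
    std::string result;
    for (std::size_t i = 0; i < count; ++i) {
        if (i > 0) result += ", ";
        result += array[i].description();
    }
    return result;
}

// Nothing here says T must be describable, yet the body depends on it.
template <typename T>
std::string describeFirst(const T* array, std::size_t count) {
    return describeArray(array, count > 0 ? 1 : 0);
}

struct Duck {
    std::string description() const { return "duck"; }
};

struct Wrench {};  // has no description()

inline void demo() {
    const Duck ducks[2] = {};
    assert(describeFirst(ducks, 2) == "duck");
    // Only uncommenting the next two lines surfaces the error, and the
    // diagnostic points inside describeArray rather than at describeFirst:
    // const Wrench wrenches[1] = {};
    // describeFirst(wrenches, 1);
}
```

The file compiles cleanly with `Wrench` defined right next to `describeFirst`, which is the sense in which the error is detected late.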

And just like some sort of “static interface” like I proposed would help the compiler tell us the real error, so too does a compile-time contract fix this problem. We need to introduce “constraints” like C#, but since templates are all compile-time, it’s not an ordinary interface/class. It’s something totally different: a concept:

// Requires <concepts> and <string>

template<typename T> concept Describable = requires(const T& value)

{

{ value.description() } -> std::convertible_to<std::string>;

};

Then we can use a concept as a constraint:

template<typename T> requires Describable<T> std::string describeArray(const T* array, size_t count)

…

But as long as we don’t have such, uh, concepts (heh) in other languages, we can use other techniques to emulate them, like “compilation tests”. The solutions I gave are all a little awkward. Naming two packages identically just to make them clash? Tests that just compile but don’t run? Well, it’s either that, or all the forced downcasting (because you know the downcast won’t fail, right? Right?) you’ve had to do whenever you wrote an adapter layer. I know which one I’d rather deal with.
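The same kind of emulation applies to C++ code that can’t use C++20 yet. One common pre-concepts approach, sketched here in C++17, is a detection trait plus a `static_assert`. The trait name `is_describable` and the example types are hypothetical, and `describeArray` is simplified to join inline.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <type_traits>
#include <utility>

// Primary template: by default a type is not describable.
template <typename T, typename = void>
struct is_describable : std::false_type {};

// Selected only when a const T has description() convertible to std::string.
template <typename T>
struct is_describable<
    T,
    std::enable_if_t<std::is_convertible_v<
        decltype(std::declval<const T&>().description()), std::string>>>
    : std::true_type {};

template <typename T>
std::string describeArray(const T* array, std::size_t count) {
    // States the contract up front, so the diagnostic names the real problem.
    static_assert(is_describable<T>::value,
                  "describeArray requires a const description() convertible to std::string");
    std::string result;
    for (std::size_t i = 0; i < count; ++i) {
        if (i > 0) result += ", ";
        result += array[i].description();
    }
    return result;
}

struct Duck {
    std::string description() const { return "duck"; }
};

struct Wrench {};  // no description()

static_assert(is_describable<Duck>::value, "Duck fulfills the contract");
static_assert(!is_describable<Wrench>::value, "Wrench does not");

inline void demo() {
    const Duck ducks[2] = {};
    assert(describeArray(ducks, 2) == "duck, duck");
}
```

Unlike a real concept, this does not remove the function from overload resolution, and the compiler may still report the missing member after the `static_assert` message.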

Testing

Now, let’s talk about testing our application. In our E2E tests, we’ll want to link in whatever Library the app is actually using. But in more isolated unit tests, we’ll likely want to mock the Library. If you use some mocking framework like Moq or Mockito, you know that the prerequisite to something being “mockable” is that it’s dynamically dispatched (an interface, abstract or at least virtual method). You can try PowerMock or something similar, but that kind of runtime hacking isn’t always available, depending on the language/environment. The problem is essentially the same. When running for real, we want to call the real library. When running in tests, we want to call a mock library. That’s a variation. Variations are typically solved with polymorphism, and that’s exactly what mocking frameworks do. They take advantage of a polymorphic boundary to redirect your application’s calls to the mocking framework.

How do we handle mocking (or any kind of test double, for that matter) if we’re doing this kind of static compile/link time binding trick?

Interestingly enough, if you think about it, runtime binding is unnecessarily late in most cases here as well. How often do you find yourself sometimes mocking a class and sometimes not? If that class is part of your application, you’ll do that. But an external class? We’ll always mock that. Every time our unit test rig runs, we’ll be mocking Library. So when is the variation selected? At build time! We select it when we decide to build the test rig instead of building the application.

The solution looks the same. We just create another version of the package that has mock classes instead of real ones:

public class LibraryStore

{

LibraryEntity FetchEntity(string Id)

{

// Mocking stuff

}

void ProcessEntity(LibraryEntity Entity)

{

// Mocking stuff

}

}

public class LibraryEntity

{

string Id

{

get

{

// Mocking stuff

}

}

}

Unfortunately this would mean we have to reinvent mocking inside our classes. A mocking framework exercises late binding in a more powerful way to allow it to record invocations and inject behavior. We don’t need dynamic dispatch to do that, but we might still want it if we’re not going to rewrite or code-generate the mocking logic. What if we made the “test” version of the library flexible enough to be molded by whatever test framework we want to use?

public class LibraryStore

{

public virtual LibraryEntity FetchEntity(string Id)

{

throw new Exception("Empty stub");

}

public virtual void ProcessEntity(LibraryEntity Entity)

{

throw new Exception("Empty stub");

}

}

public class LibraryEntity

{

public virtual string Id

{

get { throw new Exception("Empty stub"); }

}

}

The “cleaner” choice would be to make the classes abstract, or even just make them interfaces:

public interface LibraryStore

{

LibraryEntity FetchEntity(string Id);

void ProcessEntity(LibraryEntity Entity);

}

public interface LibraryEntity

{

string Id { get; }

}

This will work fine unless we’re calling constructors somewhere, most likely for LibraryStore. In that case, the app won’t compile with this library linked in because it will be trying to construct interface instances. But what if we make it part of the contract that these classes can’t be directly constructed? Instead, they provide factory methods, and their constructors are private? That will grant us the flexibility to swap in abstract versions when we need them.

To add this to our interface definition “tests”, we would need to somehow test that the classes are not directly constructible. Testing negatives for compilation is tricky. You’d have to create a separate compilation unit for each negative case, and have your build system try to compile them and fail if compilation succeeds. Since you have to script that part, you might as well script the whole thing. You can write a “testFailsCompilation” script that takes a source file as an input, runs the compiler from the command line and checks whether it failed. In your project, the test suite would be composed of scripts that call the “testFailsCompilation” script with different source files.
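Some languages make this particular negative easier to state. In C++, for instance, the standard type traits can assert non-constructibility as an ordinary positive compile check, so no failing compilation unit is needed. A minimal sketch, assuming a hypothetical factory named `Create` and a placeholder `Name` method:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <type_traits>

class LibraryStore {
public:
    // The only sanctioned way to obtain a store.
    static std::unique_ptr<LibraryStore> Create() {
        return std::unique_ptr<LibraryStore>(new LibraryStore());
    }

    std::string Name() const { return "store"; }

private:
    // User-provided (not "= default") so the class is never an aggregate.
    LibraryStore() {}
};

// The negative case, written as a check that must hold for the build to pass.
static_assert(!std::is_default_constructible_v<LibraryStore>,
              "LibraryStore must only be created through LibraryStore::Create()");

inline void demo() {
    std::unique_ptr<LibraryStore> store = LibraryStore::Create();
    assert(store->Name() == "store");
}
```

This only covers negatives that a trait can express; anything else still needs the scripted “must fail to compile” approach.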

That’s fine, but it probably doesn’t integrate with your IDE as well. There won’t be a convenient list of test cases with play buttons next to each one and a check mark or X box indicating which ones passed/failed in the last test run. Some boilerplate can probably fix that. If you can invoke the scripts from code, then you can write test cases to invoke the script and assert on its output. Where that might cause trouble is embedded programming (mobile development is an example), where tests can or must run on a different device that may or may not have a shell or permission to use it. Really, those tests are for the build stage, so you ought to define your test target to run on your build machine. If you can set that up, even your positive test cases will integrate better. Remember that what I suggested before were “test” methods that aren’t actual [Test] cases, so they won’t show up in the list of tests either. If you split each one into its own source file, compile it with a script, then write [Test] cases that invoke each script, you recover proper IDE integration. This will make hooking them into a CI/CD pipeline simpler as well.

With that, we have covered all the bases. Add this to your tool belt. If you need variation, you probably want polymorphism. But class hierarchies (including abstract interfaces) are only one specific type of polymorphism, which we can call runtime polymorphism. There are other types as well. What we discussed here is a form of static polymorphism. When you realize you need polymorphism, don’t just jump to the type we’re all trained to think of as the one type. Think about when the variation needs to be selected, and choose the polymorphism that makes the decision at that time: no earlier, and no later.