My Language of Choice

“What’s your favorite programming language, Dan?”

“Oh, definitely C++”

…

Am I a masochist? Well, if I am, it’s irrelevant here. Am I just unfamiliar with all those fancy newer “high-level” languages? Nope, I don’t use C++ professionally. On jobs I’m writing Swift, Java, Kotlin, C# or even Ruby and JavaScript. C++ is what I write my own apps in.

Am I just insane? Again, if I am, it’s not the reason for my opinion on this matter (at least from my possibly insane perspective).

C++ is an incredibly powerful language. To be fair, it has problems (what Bjarne Stroustrup calls “barnacles”). I consider 3 of them to be major. C++20 fixed 2 of them (the headers problem that makes gratuitous use of templates murder your compile time and forces you to distribute source code, fixed with modules, and the duck typing of templates that makes template error messages unintelligible, fixed with concepts). The remaining one is reflection, which we were supposed to get in C++20, but now it’s been punted to at least C++26.

But overall, I prefer C++ because it is so powerful. Of all the languages I’ve used, I find myself saying the least often in C++ “hmm, I just can’t do what I want to do in this language”. It’s not that I’ve never said that. I just say it less often than I do in other languages.

When this conversation comes up, someone almost always asks me about memory management. It’s not uncommon for people, especially Java/C# guys, to say, “when is C++ going to get a garbage collector?”

C++ had a garbage collector… or, rather, an interface for adding one. It was removed in C++23. Not deprecated, removed. Ripped out in one clean yank.

In my list of problems/limitations of C++, resource management (not memory management, I’ll explain that shortly) is nowhere on the list. C++ absolutely kicks every other language’s ass in this area. There’s another language, D, that follows the design of C++ but frees itself from the shackles of backward compatibility, and is in almost every way far more powerful. Why do I have absolutely no interest in it? Because it has garbage collection. With that one single decision, they ruined what could easily be the best programming language in existence.

I think the problem is a lot of developers who aren’t familiar with C++ assume it’s C with the added ability to stick methods on structs and encapsulate their members. Hence, they think memory management in C++ is the same as in C, and you get stuff like this:

Programmers working in languages without garbage collection (like C and C++) must implement manual memory management in their code.

Even the Wikipedia article for garbage collectors says:

Other languages were designed for use with manual memory management… for example, C and C++

I have a huge C++ codebase, including several generic frameworks, for my own projects. I can count the number of deletes I’ve written on two hands, maybe one.

The Dark Side of Garbage Collection

Before I explain the C++ resource management system, I’m going to explain what’s wrong with garbage collection. Now, “garbage collection” has a few definitions, but I’m talking about the most narrow definition: the “tracer”. It’s the thing Java, C# and D have. Objective-C and Swift don’t have this kind of “garbage collector”, they do reference counting.

I can sum up the problem with garbage collector languages by mentioning a single interface in each of the languages: IDisposable for C#, and Closeable (or AutoCloseable) for Java.

The promise garbage collectors give me is that I don’t have to worry about cleaning stuff up anymore. The fact these interfaces exist, and work the way they do, reveals that garbage collectors are dirty liars. We might as well have named the interfaces Deletable, and the method delete.

Then, remember that I told you I can count the number of deletes I’ve written in tens of thousands of lines of C++ on one or two hands. How many of these effective deletes are in a C#/Java codebase?

Even if you don’t use these interfaces, any semantically equivalent “cleanup” call, whether you call it finish, discard, terminate, release, or whatever, counts as a delete. Now tell me, who has fewer of these calls? Java/C# or C++?

C++ wins massively, unless you’re writing C++ code that belongs in the late 90s.

Interestingly, I’ve found most developers assume when I say I don’t like garbage collectors that I’m going to start talking about performance (i.e. tracing is too slow/resource intensive), and it surprises them I say nothing about that and jump straight to these pseudo-delete interfaces. They don’t even know how much better things are in my world.

If you doubt that dispose/close patterns are the worst possible way to deal with resources, allow me to explain how they suffer from all the problems that manual pointers in C suffer from, plus more:

- You have to clean them up, and it’s invisible and innocuous if you don’t

- If you forget to clean them up, the explosion is far away (in space and time) from where the mistake was made

- You have no idea if it’s your responsibility. In C, if you get a pointer from a function, what do you do? Call free when you’re done, or not? Naming conventions? Read docs? What if the answer is different each time you call a function!?

- Static analysis is impossible, because a pointer that needs to be freed is syntactically indistinguishable from one that shouldn’t be freed

- You can’t share pointers. Someone has to free it, and therefore be designated the sole true owner.

- Already freed pointers are still around, land mines ready to be stepped on and blow your leg off.

Replace “pointer” and free with IDisposable/Closeable and Dispose/close respectively, and everything carries over.

The inability to share these types is a real pain. When the need arises, you have to reinvent a special solution. ADO.NET does this with database connections. When you obtain a connection, which is an IDisposable, internally the framework maintains a count of how many connections are open. Since you can’t properly share an IDisposable, you instead “open” a new connection every time, but behind the scenes it keeps track of the fact an identical connection is already open, and it just hands you a handle to this open connection.

Connection pooling is purported to solve a different problem of opening and closing identical connections in rapid succession, but the need to do this to begin with is born out of the inability to create a single connection and share it. The cost of this is that the system has to guess when you’re really done with the connection:

If MinPoolSize is either not specified in the connection string or is specified as zero, the connections in the pool will be closed after a period of inactivity. However, if the specified MinPoolSize is greater than zero, the connection pool is not destroyed until the AppDomain is unloaded and the process ends.

This is ironic, because the whole point of IDisposable is to recover the deterministic release of scarce resources that is lost by using a GC. By this point, you might as well just hand the database connection to GC, and do the closing in the finalizer… except that’s dangerous (more on this later), and it also loses you any control over release (i.e. you can’t define a “period of inactivity” to be the criterion).

This is just reinvented reference counting, but worse: instead of expressing directly what you’re doing (sharing an expensive object, so that the last user releasing it causes it to be destroyed), you have to hack around the limitation of no sharing and write code that looks like it’s needlessly recreating expensive objects. Each time you need something like this, you have to rebuild it. You can’t write a generic shared resource that implements the reference counting once. People have tried, and it never works (we’ll see why later).
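For contrast, here’s a minimal sketch of what “expressing directly what you’re doing” can look like when the language lets a library own the counting. It uses the standard std::shared_ptr; the Connection type and its open-connection counter are hypothetical stand-ins for an expensive resource, not anything from ADO.NET.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for an expensive resource such as a database connection.
class Connection
{
public:
    explicit Connection(int& openCount) : _openCount(openCount) { ++_openCount; }  // "open"
    ~Connection() { --_openCount; }                                                // "close"

    Connection(const Connection&) = delete;
    Connection& operator=(const Connection&) = delete;

private:
    int& _openCount;  // how many connections are currently open
};

// Two users share one connection; it closes when the last of them lets go.
inline int openConnectionsAfterSharing()
{
    int openCount = 0;
    {
        std::shared_ptr<Connection> first = std::make_shared<Connection>(openCount);
        {
            std::shared_ptr<Connection> second = first;  // share it, don't reopen it
            assert(openCount == 1);
            assert(first.use_count() == 2);
        }   // second releases; first still owns, so nothing closes
        assert(openCount == 1);
    }       // the last owner releases; the destructor closes the connection
    return openCount;
}
```

Nobody calls a close method anywhere in that function, and the sharing logic is written once, in the library, instead of being rebuilt for each resource type.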

Okay, well hopefully we can restrict our use of these interfaces to just where they’re absolutely needed, right?

IDisposable/Closeable are zombie viruses. When you add one as a member to a class A, it’s not uncommon that the (or at least a) “proper” time to clean up that member is when the A instance is no longer used. So you need to make A an IDisposable/Closeable too. Anything holding an A as a member then likely needs to become an IDisposable/Closeable itself, and on and on. Then you have to write boilerplate, which can usually be generated by your IDE (that’s always a sign of a language defect, that a tool can autogenerate code you need but the compiler can’t), to have your Dispose/close just call Dispose/close on all IDisposable/Closeable members. Except that’s not always correct. Maybe some of those members are just being borrowed. Back to the docs!

Now you’re doing what C++ devs had to do in the 90s: write destructors that do nothing but call delete on all pointer members… except when they shouldn’t.

In fact, IDisposable/Closeable aren’t enough for the common case of hierarchies and member cleanup. A class might also hold handles to “native” objects that need to be cleaned up whenever the instance is destroyed. As I’ll explain in a moment, you can’t safely Dispose/close your member objects in a finalizer, but you can safely clean up native resources (sort of…). So you need two cleanup paths: one that cleans up everything, which is what a call to Dispose/close will do, and one that only does native cleanup, which is what the finalizer will trigger. But then, since the finalizer could get called after someone calls Dispose, you need to make sure you don’t do any of this twice, so you also need to keep track of whether you’ve already done the cleanup.

The result is this monstrosity:

    protected virtual void Dispose(bool disposing)
    {
        if (_disposed)
        {
            return;
        }

        if (disposing)
        {
            // TODO: dispose managed state (managed objects).
        }

        // TODO: free unmanaged resources (unmanaged objects) and override a finalizer below.
        // TODO: set large fields to null.

        _disposed = true;
    }

I mean, come on! The word “Dispose” shows up as an imperative verb, a present participle, and a past participle. It’s a method whose parameter basically means “but sort of not really” (I call these “LOLJK” parameters). Where did I find this demonry? On Microsoft’s docs, as an example of a pattern you should follow, which means you won’t just see this once, but over and over.

Raw C pointers never necessitated anything that ridiculous.

For the love of God keep this out of C++. Keep it as far away as possible.

Now, the real question here isn’t why do we have to go through all this trouble when using IDisposable/Closeable. Those are just interfaces marking a uniform API for utterly manual resource management. We already know manual resource management sucks. The real question is: why can’t the garbage collector handle this? Why don’t we just do our cleanup in finalizers?

Because finalizers are horrible.

They’ve been completely deprecated in Java, and Microsoft is warning people to never write them. The consensus is now that you can’t even safely release native resources there. It’s so easy to get it wrong. Storing multiple native resources in a managed collection? The collection is managed, so you can’t touch it. Did you know finalizers can get called on objects while the scope they’re declared in is still running, which means they can get called mid-execution of one of their methods? And there’s more. Allowing arbitrary code to run during garbage collection can cause all sorts of performance problems or even deadlocks. Take a look at this and this thread.

Is this really “easier” than finding and removing reference cycles?

The prospect of doing cascading cleanup in finalizers fails because of how garbage collectors work. When I’m in a finalizer, I can’t safely assume anything in my instance is still valid except for native/external objects that I know aren’t being touched by the garbage collector. In particular, the basic assumption about a valid object is violated: that its members are valid. They might not be. Finalizers are intrinsically messages sent to half-dead objects.

Why can’t the garbage collector guarantee order? This is, I think, the biggest irony in all of this. The answer is reference cycles. It turns out neglecting to define an ordered topology of your objects causes some real headaches. Garbage collectors just hide this, encourage you to neglect the work of repairing cyclical references, and force you to always deal with the possibility of cycles even when you can prove they don’t exist. If those cyclical references are nothing but bags of bits taken from the memory pool, maybe it will work out okay. Maybe. As soon as you want any kind of well-ordered cleanup logic, you’re hosed.

It doesn’t even make sense to try to apply garbage collectors to non-memory resources like files, sockets, database connections, and so on, especially when you remember some of those resources are owned by entire machines, or even networks, rather than single processes. It turns out that “trigger a sequence to build up a structure, then trigger the exact opposite sequence in reverse order to tear it down” is a highly generic, widely useful paradigm, which we C++ guys call Resource Acquisition Is Initialization.

Anything from opening and closing a file, to acquiring and releasing a mutex, to describing and committing an animation, can fall under this paradigm. Any situation you can imagine where there is a balanced “start” and “finish” logic, which is inherently hierarchical: if “starting” X really means to start A, B then C in that order, then “finishing” X will at least include “finishing” C, B then A in that order.
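The “start A, B, C, then finish C, B, A” shape can be sketched in a few lines of C++. Step and X here are hypothetical names invented for the illustration; the only language rule being relied on is that members are constructed in declaration order and destroyed in the reverse order.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical step that records when it starts and when it finishes.
class Step
{
public:
    Step(std::string name, std::vector<std::string>& log)
        : _name(std::move(name)), _log(log)
    {
        _log.push_back("start " + _name);
    }

    ~Step()
    {
        _log.push_back("finish " + _name);
    }

    Step(const Step&) = delete;
    Step& operator=(const Step&) = delete;

private:
    std::string _name;
    std::vector<std::string>& _log;
};

// "Starting" X means starting A, B, then C, in that order.
class X
{
public:
    explicit X(std::vector<std::string>& log)
        : _a("A", log), _b("B", log), _c("C", log)
    {
    }

private:
    Step _a;
    Step _b;
    Step _c;
};

inline std::vector<std::string> runX()
{
    std::vector<std::string> log;
    {
        X x(log);
        assert(log.size() == 3);  // all three steps have started
    }   // x goes out of scope here, so C, B and A finish in that order
    return log;
}
```

Calling runX() yields the three starts followed by the three finishes in reverse, without X spelling out any teardown code of its own.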

By giving up deterministic “cleanup” of your objects in a language, you’re depriving yourself of this powerful strategy, which goes way beyond the simple case of “deleting X, who was constructed out of A, B and C, means deleting C, B and A”. Deterministically run pairs of hierarchical setup/teardown logic are ubiquitous in software. Memory allocation and freeing is just a narrow example of it.

For this reason, garbage collection definitely is not what you want to have baked into a language, attempting to be the one-size-fits-all resource management strategy. It simply can’t be that, and then since the language-baked resource management is limited in what it can handle, you’re left totally out to dry, reverting to totally manual management, of all other resources. At best, garbage collection is something you can opt into for specific resources. That requires a language capability to tag variables with a specific resource management strategy. Ideally that strategy can be written in the language itself, using its available features, and shipped as a library.

I don’t know any language that could do this, but I know one that comes really close, and does allow “resource management as libraries” for every other management technique besides tracing.

What was my favorite language, again?

The Underlying Problem

I place garbage collection into the same category as ORMs: tools that attempt to hide a problem instead of abstracting the problem.

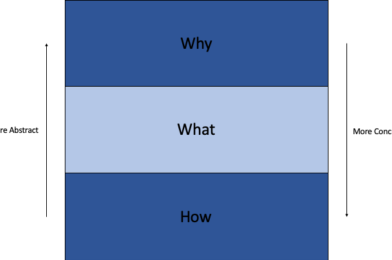

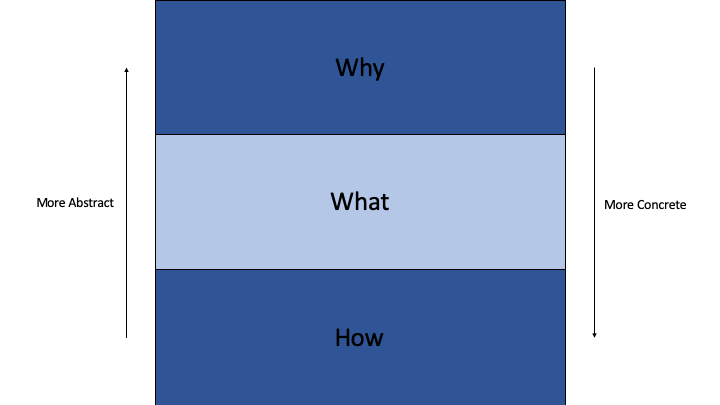

We all agree manual resource management is bad. Why? Because managing resources manually forces us to tell a system how to solve a problem instead of telling it what problem to solve. There are generally two ways to deal with the tedium of spelling out how. The first is to abstract: understand what exactly you’re telling the system to do, and write a higher level interface to directly express this information that encapsulates the implementation details. The other is to hide: try to completely take over the problem and “automagically” solve it without any guidance at all.

ORMs, especially of the Active Record variety, are an example of the second approach applied to interacting with a database. Instead of relieving you from wrestling with the how of mapping database queries to objects, it promises you can forget that you’re even working with a database. It hides database stuff entirely within classes that “look” and “act” like regular objects. The database interaction is under-the-hood automagic you can’t see, and therefore can’t control.

Garbage collection is the same idea applied to memory management: the memory releases are done totally automagically, and you are promised you can forget a memory management problem even exists.

Of course not really. Besides the fact, as I’ve explained, that it totally can’t manage non-memory resources, it also really doesn’t let you forget memory management exists. In my experience with reference counted languages like Swift, the most common source of “leaks” isn’t reference cycles, but simply holding onto references to unneeded stuff for too long. This is especially easy to do if you’re sticking references in a collection, and nothing is ever pruning the collection. That’s not a leak in the strict sense (an object unreachable to the program that can’t be deleted), but it’s a semantic leak with identical consequences. Tracers won’t help you with that.

All of these approaches suffer from the same problems: some percentage, usually fairly high (let’s say 85-90%) of problems are perfectly solved by these automagic engines. The remaining 10-15% are not, and the very nature of automagic systems that hide the problem is that they can’t be controlled or extended (doing so re-exposes the problem they’re attempting to hide). Therefore, nothing can be done to cover that 10-15%, and those problems become exponentially worse than they would have been without a fancy generic engine. You have to hack around the engine to deal with that 10-15%, and the result is more headaches than contending directly with the 85% ever would have caused.

Automagic solutions that hide the problem intrinsically run afoul of the open-closed principle. Any library or tool that violates the open-closed principle will make 85-90% of your problems super easy, and the remaining 10-15% total nightmares.

The absolute worst thing to do with automagic engines is bake them into a language. In one sense, doing so is consistent with the underlying idea: that the automagic solution really is so generic and such a panacea that it really deserves to be an ever-present, and unavoidable all-purpose approach. It also significantly exacerbates the underlying problem: that such “silver bullets” are never actually silver bullets.

I’ve been dunking on garbage collectors, but baking reference counting into a language is the same sort of faulty reasoning: that reference counting is the true silver bullet of resource management. Reference counting at least gives us deterministic release. We don’t have to wrestle with the abominations of IDisposable/Closeable. But the fact you literally can’t create a variable without having to manage an atomic integer is a real problem inside tight loops. As I’ll get into shortly, reference counting is the way to handle shared ownership, but the vast majority of variables in a program aren’t shared (and the ones that are usually don’t need to be). This causes a proliferation of unnecessary cyclic references and memory leaks.

What is the what, and the how, of resource management? Figuring out exactly what needs to get released, and where, is the how. The what is object lifetimes. In most cases, objects need to stay alive exactly as long as they are accessible to the program. The case of daemons that keep themselves alive can be treated as a separate exception (speaking of which, those are obnoxious in garbage collected languages, you have to stick them into a global variable). For something to be accessible to the program, it needs to be stored in a variable. In object-oriented languages, variables live inside other objects, or inside blocks of code, which are all called recursively by the entry function of a thread.

We can see that the lifetime problem is precisely the problem of defining a directed, non-cyclical graph of ownership. Why can there not be cycles? Not for the narrow reason garbage collectors are designed to address, which is that determining in a very “dumb” manner what is reachable and what is not fails on cycles. Cycles make the order of release undefined. Since teardown logic must occur in the reverse order of setup (at least in general), this makes it impossible to determine what the correct teardown logic is.

The missing abstraction in a language like C is the one that lets us express in our software what this ownership graph is, instead of just imagining it and writing out the implications of it (that this pointer gets freed here, and that one gets freed there).

The Typology of Ownership

We can easily list out the types of ownership relationships that will occur in a program. The simplest one is scope ownership: an object lives, and will only ever live, in a single variable, and therefore its lifetime is equal to the scope of that one variable. The scope of a variable is either the block of code it’s declared in (for “local” variables), or the object it’s an instance member of. The ownership is unique and static: there is one owner, and it doesn’t change.

Both code blocks and objects have cascading ownership, and therefore trigger cascading release. When a block of code ends, that block dies, which causes all objects owned by it to die, which causes all objects owned by those objects to die, and so on. The cascading nature is a consequence of the unique and static nature of the ownership, with the parent-child relationship (i.e. the direction of the graph) clearly defined at the outset.

Slightly more complex than this is when an object’s ownership remains unique at all times (we can guarantee there is only ever one variable that holds an object), but the owner can change, and thereby transfer from one scope to another. Function return values are a basic example. We call this unique ownership. The basic requirement of unique ownership is that only transfers can occur, wherein the original variable must release and no longer be a reference to the object when the transfer occurs.

The next level of complexity is to relax the requirement of uniqueness, by allowing multiple variables to be assigned to the same object. This gives us shared ownership. The basic requirement of shared ownership is that the object becomes unowned, and therefore cleaned up, when the last owner releases it.

That’s it! There’s no more to ownership. The owner either changes or it doesn’t. If it does change, either the number of owners can change or it can’t (all objects start with a single owner, so if it doesn’t change, it stays at 1). There’s no more to say.
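As a rough sketch, here is how those three kinds of ownership might be spelled in modern C++: a plain value member for scope ownership, std::unique_ptr for unique ownership, and std::shared_ptr for shared ownership. Engine, Car and the function names are hypothetical, made up for the illustration.

```cpp
#include <cassert>
#include <memory>
#include <utility>

struct Engine { int horsepower = 100; };  // hypothetical

struct Car
{
    Engine engine;  // scope ownership: the engine lives and dies with the Car
};

// Unique ownership: the return value transfers ownership to the caller.
inline std::unique_ptr<Engine> buildEngine()
{
    return std::make_unique<Engine>();
}

inline bool demoOwnership()
{
    Car car;  // scope-owned by this block; its engine is scope-owned by the car

    std::unique_ptr<Engine> spare = buildEngine();
    std::unique_ptr<Engine> moved = std::move(spare);   // transfer: spare gives it up
    bool transferred = (spare == nullptr) && (moved != nullptr);

    std::shared_ptr<Engine> shared = std::move(moved);  // relax uniqueness
    std::shared_ptr<Engine> other = shared;             // a second owner
    bool sharedByTwo = (shared.use_count() == 2);

    assert(car.engine.horsepower == 100);
    return transferred && sharedByTwo;
}   // everything is released here, in reverse order of declaration
```

The owner count can only grow once you’ve opted into the shared type, and a transfer always leaves the old variable empty, which matches the rules described above.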

However, we have to contend with the limitation of being directed. The graph of variable references is generally not directed. This is why we can’t just make everything shared, and every variable an owner of its assigned object. We get a proliferation of cycles, and that destroys the well-ordered cleanup logic, whether we can trace the graph to find disconnected islands or not.

We need to be able to send messages in both directions. Parents will send messages to their children, but children need to send messages to parents. To do this, a child simply needs a non-owning reference back to its parent. Now, the introduction of non-owning references is what creates this risk of dangling references… a problem guaranteed to not exist if every reference is owning. How can we be sure non-owning references are still valid?

Well, the reason we have to introduce non-owning references is to send messages up the ownership hierarchy, in reverse direction of the graph. When does a child have to worry if its parent is still alive? Well, definitely not in the case of unique ownership. In that case, the fact the child is still alive and able to send messages is already proof the (one, unique) parent is still around. The same applies for more distant ancestors. If an ownership graph is all unique, then a child can safely send a message to a great-grandparent, knowing that there’s no way he could still exist to send messages if any of his unique ancestors were gone.
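A minimal sketch of the unique-ownership case, assuming a hypothetical Parent that uniquely owns a Child. The child’s back-reference is a plain non-owning pointer, and it’s safe because the child can’t outlive the one parent that owns it.

```cpp
#include <cassert>
#include <memory>

class Parent;

class Child
{
public:
    explicit Child(Parent& parent) : _parent(&parent) {}

    void reportDone();  // sends a message up the hierarchy

private:
    Parent* _parent;    // non-owning: the unique parent outlives this child
};

class Parent
{
public:
    Parent() : _child(std::make_unique<Child>(*this)) {}

    // Copying or moving the parent would leave the child's back-pointer stale.
    Parent(const Parent&) = delete;
    Parent& operator=(const Parent&) = delete;

    void runChild() { _child->reportDone(); }  // message down
    void childFinished() { ++_finished; }      // message up lands here
    int finished() const { return _finished; }

private:
    int _finished = 0;
    std::unique_ptr<Child> _child;             // unique owner of the child
};

inline void Child::reportDone()
{
    assert(_parent != nullptr);
    _parent->childFinished();  // no liveness check needed under unique ownership
}
```

Deleting the copy operations is a design choice of this sketch: it keeps the “one parent, fixed for the child’s whole life” assumption true, which is what makes the unchecked back-pointer acceptable.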

This is no longer true when objects are shared. A shared object only knows that one of its owners is still alive, so it cannot safely send a message to any particular parent. And thus we have the partner to shared ownership, which is the weak reference: a reference that is non-owning and also can be safely checked before access to see if the object still exists.

This is an important point that does not appear to be well-appreciated: weak references are only necessary in the context of shared ownership. Weak references force the user to contend with the possibility of the object being gone. What should happen then? The most common tactic may be to do nothing, but that’s likely just a case of stuffing problems under the rug (i.e. avoiding crashing when crashing is better than undefined behavior). You have to understand what the correct behavior is in both variations (object is still present, and object is absent) when you use weak references.
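Here’s a small sketch of that “check before access” step using the standard std::weak_ptr, whose lock() either hands back a temporary owner or an empty pointer. Document and the two strings returned are hypothetical; the point is only that both branches have to be written.

```cpp
#include <cassert>
#include <memory>
#include <string>

struct Document { std::string title; };  // hypothetical shared object

// A non-owning observer that has to handle both cases explicitly.
inline std::string describe(const std::weak_ptr<Document>& weak)
{
    if (std::shared_ptr<Document> doc = weak.lock())  // still alive: safe to use
    {
        return "open: " + doc->title;
    }
    return "closed";  // gone: you have to decide what this case means
}

inline std::string demoWeak()
{
    std::shared_ptr<Document> owner = std::make_shared<Document>();
    owner->title = "notes";
    std::weak_ptr<Document> observer = owner;  // does not keep the document alive

    std::string before = describe(observer);
    owner.reset();                             // the last owner releases the document
    assert(observer.expired());
    std::string after = describe(observer);

    return before + ", " + after;
}
```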

In summary, we have for ownership:

- Scope Ownership

- Unique Ownership

- Shared Ownership

And for non-owning references:

- “Unsafe” references

- Weak references

What we want is a language where we can tell it what it needs to know about ownership, and let it figure out from that when to release stuff.

Additionally, we want to be able to control both what “creating” and “releasing” a certain object entails. The cascading of scope-owned members is given, and we shouldn’t have to, nor should we be able to, modify this (to do so breaks the definition of scope ownership). We should also be able to add additional custom logic.

Once our language lets us express who’s an owner of what, everything else should be taken care of. We should not have to tell the program when to clean stuff up. That should happen purely as a consequence of an object becoming unowned.

The Proper Solution

Let’s think through how we might try to solve this problem in C. A raw C pointer does not provide any information on ownership. An owned C pointer and a borrowed C pointer are exactly the same. There are two possibilities about ownership: the owner is either known at compile-time (really authorship time, which applies to interpreted languages too), or it’s known only at run-time. A basic example is a function that mallocs a pointer and returns it. The returned pointer is clearly an owning pointer. The caller is responsible for freeing it.

Whenever something is known at authorship time, we express it with the type system. If a function returns an int*, it should instead return a type that indicates it’s an owning pointer. Let’s call it owned_int_ptr:

    struct owned_int_ptr
    {
        int* ptr;
    };

When a function returns an owned_int_ptr, that adds the information that the caller must free it. We can also define an unowned_int_ptr:

    struct unowned_int_ptr
    {
        int* ptr;
    };

This indicates a pointer should not be freed.

For the case where it’s only known at runtime if a pointer is owned, we can define a dynamic_int_ptr:

    struct dynamic_int_ptr
    {
        int* ptr;
        char owning;
    };

(The owning member is really a bool, but C before C99 doesn’t have a bool type, so we use a char where 0 means false and everything else means true.)

If we have one of these, we need to check owning to determine if we need to call free or not.
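To make that check concrete, here’s a sketch written in the C-like subset of C++. The struct is the dynamic_int_ptr from above; the three helper functions are hypothetical names added for the illustration.

```cpp
#include <cassert>
#include <cstdlib>

struct dynamic_int_ptr
{
    int* ptr;
    char owning;  // 0 means borrowed, anything else means owned
};

// Hypothetical helper: allocate an int that the caller owns.
inline dynamic_int_ptr make_owned_int(int value)
{
    dynamic_int_ptr result;
    result.ptr = static_cast<int*>(std::malloc(sizeof(int)));
    if (result.ptr != nullptr)
    {
        *result.ptr = value;
    }
    result.owning = 1;
    return result;
}

// Hypothetical helper: wrap someone else's int without taking ownership.
inline dynamic_int_ptr borrow_int(int* existing)
{
    dynamic_int_ptr result;
    result.ptr = existing;
    result.owning = 0;
    return result;
}

// Frees only if owning. Returns 1 if it freed something, 0 otherwise.
inline int release_int(dynamic_int_ptr* p)
{
    assert(p != nullptr);
    int freed = 0;
    if (p->owning)
    {
        std::free(p->ptr);
        freed = 1;
    }
    p->ptr = nullptr;  // make a second release harmless
    p->owning = 0;
    return freed;
}
```

Nothing forces anyone to call release_int, and nothing stops a copy of the struct from being released twice.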

Now, let’s think about the problems with this approach:

- We’d have to declare these pointer types for every variable type.

- We have to tediously add a .ptr to every access to the underlying pointer

- While this tells us whether we need to call free before tossing a variable, we still have to actually do it, and we can easily forget

For the first problem, a C developer would use macros. Macros are black magic, so we’d really like to find a better solution. Ignoring macros, none of these three problems can really be solved in C. We need to add some stuff to the language to make them properly solvable:

- Templates

- User-overridable * and -> operators

- User-defined cleanup code that gets automatically inserted by the compiler whenever a variable goes out of scope

You see where I’m going, don’t you? (Unless you’re that unfamiliar with C++)

With these additions, the C++ solution is:

    template<typename T> class auto_ptr
    {
    public:

        auto_ptr(T* ptr) : _ptr(ptr)
        {
        }

        ~auto_ptr()
        {
            delete _ptr;
        }

        T* operator->() const
        {
            return _ptr;
        }

        T& operator*() const
        {
            return *_ptr;
        }

    private:

        T* _ptr;
    };

Welcome to C++03 (that’s the C++ released in 2003)!

By returning an auto_ptr, which is an owning pointer, you’ll get a variable that behaves identically to a raw pointer when you dereference or access members via the arrow operator, and that automatically deletes the pointer when the auto_ptr is discarded by the program (when it goes out of scope).

The last part is very significant. There’s something unique to C++ that makes this possible:

C++ has auto memory management!

This is what all those articles that say “C++ only has manual memory management” fail to recognize. C++ does have manual memory management (new and delete), but it also has a type of variable with automatic storage. These are local variables, declared as values (not as pointers), and instance members, also declared as values. This is usually considered equivalent to being stack-allocated, but that’s neither important nor always correct (a heap-allocated object’s members are on the heap, but are automatically deleted when the object is deleted).

The important part is auto variables in C++ are automatically destroyed at the end of the scope in which they are declared. This behavior is inherited from C, which “automatically” cleans up variables at the end of their scope.

But C++ makes a crucial enhancement to this: destructors.

Destructors are user defined code, whatever your heart desires, added to a class A that gets called any time an instance of A is deleted. That includes when an A instance with automatic storage goes out of scope. This means the compiler automatically inserts code when variables go out of scope, and we can control what that code is, as long as we control what types the variables are.

That’s the real garbage collection, and it’s the only garbage collection we actually need. It’s completely deterministic and doesn’t cost a single CPU cycle more than what it takes to do the actual releasing, because the instructions are inserted (and can be inlined) at compile-time.
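One consequence worth seeing in code: because the compiler inserts the destructor call at every exit from the scope, cleanup happens on early returns and on exceptions too. This is a minimal sketch with a hypothetical BusyGuard that sets a flag while it’s alive and clears it when destroyed.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical guard: marks a flag busy while alive, clears it on destruction.
class BusyGuard
{
public:
    explicit BusyGuard(bool& busy) : _busy(busy) { _busy = true; }
    ~BusyGuard() { _busy = false; }

    BusyGuard(const BusyGuard&) = delete;
    BusyGuard& operator=(const BusyGuard&) = delete;

private:
    bool& _busy;
};

// Three ways out of the scope; no cleanup call is written on any of them.
inline int work(bool& busy, int mode)
{
    BusyGuard guard(busy);
    assert(busy);

    if (mode == 0) return -1;                           // early return
    if (mode == 1) throw std::runtime_error("failed");  // exception
    return 1;                                           // normal exit
}

// Reports whether the flag is still set after work() has been left.
inline bool busyAfter(int mode)
{
    bool busy = false;
    try
    {
        work(busy, mode);
    }
    catch (const std::runtime_error&)
    {
        // the guard was already destroyed while the exception unwound work()
    }
    return busy;
}
```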

You can’t have destructors in a garbage collected language. Finalizers aren’t destructors, and the pervasive myth that they are (encouraged at least in C# by notating them identically to C++ destructors) has caused endless pain. You can have them in reference counted languages. So far, reference counted languages are on par with C++ (except for performance, nudging those atomic reference counts is expensive). But let’s keep going.

Custom Value Semantics

Why can’t we build our own “shared disposable” as a userland class in C#? Something like this:

    using System;
    using System.Threading;

    class SharedDisposable<T> : IDisposable where T : IDisposable
    {
        private class ControlBlock
        {
            public T Source;
            public int Count;
        }

        private readonly ControlBlock _controlBlock;

        public SharedDisposable(T source)
        {
            _controlBlock = new ControlBlock { Source = source, Count = 1 };
        }

        // Meant to act like a copy: share the control block and bump the count.
        public SharedDisposable(SharedDisposable<T> other)
        {
            _controlBlock = other._controlBlock;
            Interlocked.Increment(ref _controlBlock.Count);
        }

        public T Get()
        {
            return _controlBlock.Source;
        }

        public void Dispose()
        {
            // The last owner to release disposes the underlying resource.
            if (Interlocked.Decrement(ref _controlBlock.Count) == 0)
            {
                _controlBlock.Source.Dispose();
            }
        }
    }

One problem, of course, is that if the source IDisposable is accessible directly to anyone, they can Dispose it themselves. Sure, but really that problem exists for any “resource manager” class, including smart pointers in C++. The bigger problem is that if I do this:

    void StoreSharedDisposable(SharedDisposable<MyClass> Incoming)
    {
        this._theSharedThing = Incoming;
    }

The thing that’s supposed to happen, namely incrementing the reference count, doesn’t happen. This is just a reference assignment. None of my code gets executed at the =. What I have to do is write this:

void StoreSharedDisposable(SharedDisposable<MyClass> Incoming)
{
    this._theSharedThing = new SharedDisposable<MyClass>(Incoming);
}

Like calling Dispose, this is another thing you’ll easily forget to do. We need to be able to require that assigning one SharedDisposable to another invokes the second constructor.

This is where C++ pulls ahead even of reference counted languages, and where it becomes, AFAIK, truly unique (except direct derivatives like D). A C++ dev will look at that second constructor for SharedDisposable and recognize it as a copy constructor. But it doesn’t have the same effect. Like most “modern” languages, C# has reference semantics, so assigning a variable involves no copying whatsoever. C++ has primarily value semantics, unless you specifically opt out with * or &, and unlike the limited value semantics (structs) in C# and Swift, you have total control over what happens on copy.

(If C#/Swift allowed custom copy constructors for structs, it would render the copy-on-write optimization impossible, and since you can only have value semantics, unless you tediously wrap a struct in a class, losing this optimization would mean a whole lot of unnecessary copying).

Speaking of this, there’s a big, big problem with auto_ptr. You can easily copy it. Then what? You have two auto_ptrs to the same pointer. Well, auto_ptrs are owning. You have two owners, but no sharing logic. The result is double delete. This is so severe a problem it screws up simply forwarding an auto_ptr through a function:

auto_ptr<int> forwardPointerThrough()
{
    auto_ptr<int> result = getThePointer();
    ...
    // Here, the auto_ptr gets copied. Then result goes out of scope, and its
    // destructor is called, which deletes the underlying pointer. You've now
    // returned an auto_ptr to an already deleted pointer!
    return result;
}

Luckily, C++ lets us take full control over what happens when a value is copied. We can even forbid copying:

template<typename T> class auto_ptr
{
public:
    ...
    auto_ptr(const auto_ptr& other) = delete;
    auto_ptr& operator=(const auto_ptr& other) = delete;
    ...
};

We also suppressed copy-assignment, which would be just as bad.

C++ again lets us define for ourselves exactly what happens when we do this:

SomeType t;
SomeType t2 = t; // We can make the compiler insert any code we want here, or forbid us from writing it.

This is the interplay of value semantics and user-defined types that lets us take total control of how those semantics are implemented.
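Both halves of that comment can be sketched in a few lines. Counted and NoCopy are made-up types for illustration only: one runs our own code on every copy, the other makes copying a compile error, which the standard type traits let us check without writing the offending line.

```cpp
#include <cassert>
#include <type_traits>

// Hypothetical type whose copy operations run code we chose: they count copies.
class Counted
{
public:
    Counted() = default;
    Counted(const Counted& other) : _copies(other._copies + 1) {}
    Counted& operator=(const Counted& other)
    {
        _copies = other._copies + 1;
        return *this;
    }
    int copies() const { return _copies; }

private:
    int _copies = 0;
};

// Hypothetical type that forbids copying altogether.
class NoCopy
{
public:
    NoCopy() = default;
    NoCopy(const NoCopy&) = delete;
    NoCopy& operator=(const NoCopy&) = delete;
};

// The compiler knows which operations exist, so misuse fails at compile time.
static_assert(std::is_copy_constructible<Counted>::value, "Counted can be copied");
static_assert(!std::is_copy_constructible<NoCopy>::value, "NoCopy cannot be copied");
static_assert(!std::is_copy_assignable<NoCopy>::value, "NoCopy cannot be copy-assigned");

int copiesAfterTwoAssignments()
{
    Counted t;
    Counted t2 = t;   // runs Counted's copy constructor
    Counted t3;
    t3 = t2;          // runs Counted's copy assignment operator
    assert(t.copies() == 0); // the original is untouched
    return t3.copies();
}
```

Here copiesAfterTwoAssignments() returns 2: one increment from the copy construction and one from the copy assignment. Writing NoCopy b = a; anywhere would simply not compile.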

That helps us avoid the landmine of creating an auto_ptr from another auto_ptr, which means the underlying ptr now has two conflicting owners. Our attempt to pass an auto_ptr up a level in a return value will now cause a compile error. Okay, that’s good, but… I still want to pass the return value through. How can I do this?

I need some way for an auto_ptr to release its control of its _ptr. Well, let’s back up a bit. There’s a problem with auto_ptr already. What if I create an auto_ptr by assigning it to nullptr?

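auto_ptr<int> ohCrap = nullptr;

When this goes out of scope, it calls delete on a nullptr. Standard C++ defines delete on a null pointer as a no-op, so this is harmless, but an explicit check makes it clear that an empty auto_ptr is a legitimate state: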

~auto_ptr()
{
    if(_ptr)
        delete _ptr;
}

With that in place, it’s fairly obvious what I need to do to get an auto_ptr to not delete its _ptr when it goes out of scope: set _ptr to nullptr:

T* release() // Not const: it modifies _ptr
{
    T* ptr = _ptr;
    _ptr = nullptr;
    return ptr;
}

Then, to transfer ownership from one auto_ptr to another, I can do this:

auto_ptr<int> forwardPointerThrough()
{
    auto_ptr<int> result = getThePointer();
    ...
    return result.release();
}

Okay, I’m able to forward auto_ptrs, because I’m able to transfer ownership from one auto_ptr to another. But it sucks I have to add .release(). Why can’t this be done automatically? If I’m at the end of a function, and I assign one variable to another, why do I need to copy the variable? I don’t want to copy it, I want to move it.

The same problem exists if I call a function to get a return value, then immediately pass it by value to another function, like this:

doSomethingWithAutoPtr(getTheAutoPtr());

What the compiler does (or did) here is assign the result of getTheAutoPtr() to a temporary unnamed variable, then copy it into the incoming parameter of doSomethingWithAutoPtr. Since a copy happens, and we have forbidden copying an auto_ptr, this will not compile. We have to do this:

doSomethingWithAutoPtr(getTheAutoPtr().release());

But why is this necessary? The reason to call release is to make sure that we don’t end up with two usable auto_ptrs to the same object, both claiming to be owners. But the second auto_ptr here is a temporary variable, which is never assigned to a named variable, and is therefore unusable to the program except to be passed into doSomethingWithAutoPtr. Shouldn’t the compiler be able to tell that there are never really two accessible variables? There’s only one, it’s just being transferred around.

This is really a specific example of a much bigger problem. Imagine instead of an auto_ptr, we’re doing this (passing the result of one function to another function) with some gigantic std::vector, which could be megabytes of memory. We’ll end up creating the std::vector in the function, copying it when we return it (maybe the compiler optimizes this with copy elision), and then copying it again into the other function. If the function it was passed to wants to store it, it needs to copy it again. That’s as many as three copies of this giant object when really there only needs to be one. Just as with the auto_ptr, the std::vector shouldn’t be copied, it should be moved.
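Here is a rough sketch of the moved version of that scenario using std::vector and std::move from the standard library. Storage, makeBigVector and storedWithoutCopying are hypothetical names for this example. The check relies on the fact that move-constructing a std::vector hands over the existing buffer instead of allocating a new one.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical sink that keeps the vector it is given.
class Storage
{
public:
    // Taking the parameter by value lets the caller choose between copy and move.
    explicit Storage(std::vector<int> values) : _values(std::move(values)) {}
    const int* buffer() const { return _values.data(); }
    std::size_t size() const { return _values.size(); }

private:
    std::vector<int> _values;
};

std::vector<int> makeBigVector(std::size_t count)
{
    return std::vector<int>(count, 42);
}

// Returns true if the buffer that ends up in storage is the very same
// allocation the vector started with, i.e. no element was copied.
bool storedWithoutCopying()
{
    std::vector<int> big = makeBigVector(1000000);
    const int* original = big.data();
    Storage storage(std::move(big));
    assert(big.empty()); // a vector that was move-constructed from is left empty
    return storage.buffer() == original && storage.size() == 1000000;
}
```

The million ints are allocated once and then just change owners twice: once into the constructor parameter, once into the member.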

This was solved with C++11 (released in 2011) with the introduction of move semantics. With the language now able to distinguish copying from moving, the unique_ptr was born:

template<typename T> class unique_ptr
{
public:
    unique_ptr(T* ptr) : _ptr(ptr)
    {
    }

    unique_ptr(const unique_ptr& other) = delete; // Forbid copy construction
    unique_ptr& operator=(const unique_ptr& other) = delete; // Forbid copy assignment

    unique_ptr(unique_ptr&& other) : _ptr(other._ptr) // Move construction
    {
        other._ptr = nullptr;
    }

    unique_ptr& operator=(unique_ptr&& other) // Move assignment
    {
        if(this != &other)
        {
            delete _ptr; // Release whatever we currently own before taking over
            _ptr = other._ptr;
            other._ptr = nullptr;
        }
        return *this;
    }

    ~unique_ptr()
    {
        if(_ptr)
            delete _ptr;
    }

    T* operator->() const
    {
        return _ptr;
    }

    T& operator*() const
    {
        return *_ptr;
    }

    T* release()
    {
        T* ptr = _ptr;
        _ptr = nullptr;
        return ptr;
    }

private:
    T* _ptr;
};

Using unique_ptr, we no longer need to call release when simply passing it around. We can forward a return value, or pass a returned unique_ptr by value (or rvalue reference) from one function to another, and ownership is transferred automatically via our move constructor.

(We still define release in case we need to manually take over the underlying pointer).
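The same forwarding patterns can be sketched with the standard library’s std::unique_ptr (std::make_unique needs C++14). The function names mirror the earlier examples, and passTemporaryAndNamed is a made-up driver for illustration.

```cpp
#include <cassert>
#include <memory>
#include <utility>

std::unique_ptr<int> getThePointer()
{
    return std::make_unique<int>(7);
}

// Forwarding a local through a return statement moves it; no release() needed.
std::unique_ptr<int> forwardPointerThrough()
{
    std::unique_ptr<int> result = getThePointer();
    *result += 1;
    return result;
}

// Taking the parameter by value means the callee becomes the owner.
int doSomethingWithUniquePtr(std::unique_ptr<int> ptr)
{
    return *ptr;
} // ptr goes out of scope here and deletes the int

int passTemporaryAndNamed()
{
    // A temporary is moved (or elided) into the parameter automatically.
    int fromTemporary = doSomethingWithUniquePtr(forwardPointerThrough());

    // A named variable must be moved explicitly, which leaves it empty.
    std::unique_ptr<int> named = forwardPointerThrough();
    int fromNamed = doSomethingWithUniquePtr(std::move(named));
    assert(named == nullptr);

    return fromTemporary + fromNamed;
}
```

The explicit std::move on the named variable is the one place the transfer has to be spelled out, and that is deliberate: named is still reachable afterwards, so the hand-off should be visible in the code.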

We had to exercise all the capabilities of C++ related to value types, including ones that even reference counted languages don’t have, to build unique_ptr. There’s no way I could build a UniqueReference in Swift, because I can’t control, much less suppress, what happens when one variable is assigned to another. Since I can’t define unique ownership, everything is shared in a reference counted language, and I have to be way more careful about using unsafe references. What most devs do, of course, is make every unsafe reference a weak reference, which forces it to be optional, and makes you contend with situations that may never arise and for which no proper action is defined.

C++ comes with scope ownership and unsafe references out of the box, and with unique_ptr we’ve added unique ownership as a library class. To complete the typology, we just add a shared_ptr and the corresponding weak_ptr, and we’re done. Building a correct shared_ptr similarly exercises the capability of custom copy constructors: we don’t suppress copying like we do on a unique_ptr, we define it to increment the reference count. Unlike the C# example, that changes the meaning of thisSharedPtr = thatSharedPtr, instead of requiring us to call something extra.
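A small sketch with the standard std::shared_ptr and std::weak_ptr shows the count changing at a plain copy. Counts and observeSharedOwnership are names made up for this example; use_count is only meaningful here because the sketch is single-threaded.

```cpp
#include <cassert>
#include <memory>

// Hypothetical record of what we observe at each step.
struct Counts
{
    long afterCreate;
    long afterCopy;
    long afterCopyDies;
    bool weakExpiredAtEnd;
};

Counts observeSharedOwnership()
{
    Counts counts{};
    std::weak_ptr<int> observer;
    {
        std::shared_ptr<int> thatSharedPtr = std::make_shared<int>(5);
        observer = thatSharedPtr; // weak: watches the object without owning it
        counts.afterCreate = thatSharedPtr.use_count();
        {
            std::shared_ptr<int> thisSharedPtr = thatSharedPtr; // copy: adds an owner
            counts.afterCopy = thatSharedPtr.use_count();
            assert(thisSharedPtr.get() == thatSharedPtr.get()); // same object, two owners
        } // thisSharedPtr is destroyed: one owner fewer
        counts.afterCopyDies = thatSharedPtr.use_count();
    } // last owner is destroyed: the int is deleted
    counts.weakExpiredAtEnd = observer.expired();
    return counts;
}
```

The counts go 1, 2, 1, and the weak_ptr reports the object as expired once the last owner is gone. No call to anything like AddRef or Dispose appears anywhere in the code.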

And with that, the typology is complete. We are able to express every type of ownership by selecting the right type for variables. With that, we have told the system what it needs to know to trigger teardown logic properly.

The vast majority of cleanup logic is cascading. For this reason, not only do we essentially never have to write delete (the only deletes are inside the smart pointer destructors), we also very rarely have to write destructors. We don’t, for example, have to write a destructor that simply deletes the members of a class. We just make those members values, or smart pointers, and the compiler ensures (and won’t let us stop) this cascading cleanup happens.
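As an illustration of the cascade, the sketch below has a class with no destructor of its own whose members still get cleaned up. Part, Machine and destroyMachine are hypothetical names. The order in which the vector destroys its elements is not something this sketch relies on.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical part that records its own destruction in a shared log.
struct Part
{
    Part(std::string n, std::vector<std::string>& l) : name(std::move(n)), log(l) {}
    ~Part() { log.push_back(name); }
    std::string name;
    std::vector<std::string>& log;
};

// No destructor written here: the compiler-generated one destroys the members
// in reverse order of declaration, and each member cleans up after itself.
struct Machine
{
    explicit Machine(std::vector<std::string>& log)
        : engine(std::make_unique<Part>("engine", log))
    {
        wheels.push_back(std::make_unique<Part>("wheel-0", log));
        wheels.push_back(std::make_unique<Part>("wheel-1", log));
    }
    std::unique_ptr<Part> engine;
    std::vector<std::unique_ptr<Part>> wheels;
};

std::vector<std::string> destroyMachine()
{
    std::vector<std::string> log;
    {
        Machine machine(log);
        assert(log.empty()); // nothing is destroyed while the machine is alive
    } // ~Machine: wheels (declared last) go first, then engine
    return log;
}
```

All three parts end up in the log, with the engine last because it was declared first. There is not a single delete in the example.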

The only time we need to write a destructor is to tell the compiler how to do the cleanup of some non-memory resource. For example, we can define a database connection class that adapts a C database library to C++:

class DatabaseConnection
{
public:
    DatabaseConnection(std::string connectionString) :
        _handle(createDbConnection(connectionString.c_str()))
    {
    }

    DatabaseConnection(const DatabaseConnection& other) = delete;
    DatabaseConnection& operator=(const DatabaseConnection& other) = delete;

    ~DatabaseConnection()
    {
        closeDbConnection(_handle);
    }

private:
    // Raw handle from the C library. It is released by closeDbConnection, not
    // by delete, so a plain unique_ptr would be the wrong owner here.
    DbConnectionHandle* _handle;
};

Then, in any class A that holds a database connection, we simply make the DatabaseConnection a member variable. Its destructor will get called automatically when the A gets destroyed.
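A related option, sketched here under assumptions, is to let std::unique_ptr do the closing by giving it a custom deleter, so that even the wrapper needs no hand-written destructor. The C functions below are stand-ins written for this example (the real library is not shown in this post), and DbConnectionCloser, DbConnectionPtr and Repository are made-up names.

```cpp
#include <cassert>
#include <memory>

// Stand-in for the C library (hypothetical, so the sketch is self-contained).
struct DbConnectionHandle { int id; };
static int openConnections = 0;

DbConnectionHandle* createDbConnection(const char* /*connectionString*/)
{
    ++openConnections;
    return new DbConnectionHandle{openConnections};
}

void closeDbConnection(DbConnectionHandle* handle)
{
    --openConnections;
    delete handle;
}

// Deleter that tells unique_ptr to close the handle rather than delete it.
struct DbConnectionCloser
{
    void operator()(DbConnectionHandle* handle) const { closeDbConnection(handle); }
};

using DbConnectionPtr = std::unique_ptr<DbConnectionHandle, DbConnectionCloser>;

// A class that holds a connection needs no destructor of its own.
class Repository
{
public:
    explicit Repository(const char* connectionString)
        : _connection(createDbConnection(connectionString)) {}
    bool connected() const { return _connection != nullptr; }

private:
    DbConnectionPtr _connection;
};

int openConnectionsAfterScope()
{
    {
        Repository repository("db://example");
        assert(openConnections == 1 && repository.connected());
    } // ~Repository runs ~unique_ptr, which calls closeDbConnection
    return openConnections;
}
```

Because the deleter is part of the pointer’s type, the move-only behavior and the cleanup both come for free, and openConnectionsAfterScope() returns 0.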

We can use RAII to do things like declare a critical section locked by a mutex. First we write a class that represents a mutex acquisition as a C++ class:

class AcquireMutex
{
public:
    AcquireMutex(Mutex& mutex) :
        _mutex(mutex)
    {
        _mutex.lock();
    }

    ~AcquireMutex()
    {
        _mutex.unlock();
    }

private:
    Mutex& _mutex;
};

Then to use it:

void doTheStuff()
{
    doSomeStuffThatIsntCritical();

    // Critical section
    {
        AcquireMutex acquire(_theMutex);
        doTheCriticalStuff();
    }

    doSomeMoreStuffThatIsntCritical();
}

The mutex is locked at the beginning of the scope by the constructor of AcquireMutex, and automatically unlocked at the end by the destructor of AcquireMutex. This is really useful, because it’s exception safe. If doTheCriticalStuff() throws an exception, the mutex still needs to be unlocked. Manually writing unlock after doTheCriticalStuff() will result in it never getting unlocked if doTheCriticalStuff() throws. But since C++ guarantees that when an exception is thrown and caught, all scopes between the throw and catch are properly unwound, with all local variables being properly cleaned up (including their destructors getting called… this is why throwing exceptions in destructors is a big no-no), doing the unlock in a destructor behaves correctly even in this case.
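The standard library ships this same pattern as std::lock_guard. The sketch below uses it with std::mutex to show the unlock happening during unwinding. The function names echo the example above, while the fail flag and mutexUsableAfterThrow are additions made up for the demonstration.

```cpp
#include <cassert>
#include <mutex>
#include <stdexcept>

static std::mutex theMutex;

void doTheCriticalStuff(bool fail)
{
    if (fail)
        throw std::runtime_error("critical stuff failed");
}

// Returns true if the critical section threw.
bool doTheStuff(bool fail)
{
    try
    {
        std::lock_guard<std::mutex> acquire(theMutex); // locks here
        doTheCriticalStuff(fail);
    } // acquire is destroyed here, on normal exit and during unwinding alike
    catch (const std::runtime_error&)
    {
        return true;
    }
    return false;
}

bool mutexUsableAfterThrow()
{
    bool firstThrew = doTheStuff(true);   // throws while the mutex is held
    assert(firstThrew);
    bool secondThrew = doTheStuff(false); // locking again only works if it was unlocked
    return !secondThrew;
}
```

If the first call had left the mutex locked, the second call could never acquire it. It can, because the guard’s destructor ran before the catch block did.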

This whole paradigm is totally unavailable in garbage collected languages, because they don’t have destructors. You can do this in reference counted languages, but at the cost of making everything shared, which is much harder to reason correctly about than unique ownership, and the vast majority of objects are really uniquely owned. In C# this code would have to be written like this:

void DoTheStuff()
{
    DoSomeStuffThatIsntCritical();

    _theMutex.Lock();
    try
    {
        DoTheCriticalStuff();
    }
    catch
    {
        throw;
    }
    finally
    {
        _theMutex.Unlock();
    }

    DoSomeMoreStuffThatIsntCritical();
}

Microsoft’s docs on try-catch-finally show a finally block being used for precisely the purpose of ensuring a resource is properly cleaned up.

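In fact, this isn’t fully safe, because finally might not get called: if the exception is never handled anywhere up the call stack, whether the finally block runs is up to the runtime. To be absolutely sure the mutex is unlocked we’d have to do this: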

void DoTheStuff()
{
    DoSomeStuffThatIsntCritical();

    _theMutex.Lock();
    Exception? error = null;
    try
    {
        DoTheCriticalStuff();
    }
    catch (Exception e)
    {
        error = e;
    }
    finally
    {
        _theMutex.Unlock();
    }

    if (error != null)
        throw error; // Rethrowing a stored exception like this also resets its stack trace

    DoSomeMoreStuffThatIsntCritical();
}

Gross.

C# and Java created using/try-with-resources to mitigate this problem:

void DoTheStuff()
{
    DoSomeStuffThatIsntCritical();

    using (var acquire = new AcquireMutex(_theMutex))
    {
        DoTheCriticalStuff();
    }

    DoSomeMoreStuffThatIsntCritical();
}

That solves the problem for relatively simple cases like this where a resource doesn’t cross scopes. But if you want to do something like open a file, call some methods that might throw, then pass the file stream to a method that will hold onto it for some amount of time (maybe kicking off an async task), using won’t help you because that assumes the file needs to get closed locally.
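For contrast, here is a rough C++ sketch of exactly that scenario: open something, run code that may throw, then hand it to a longer-lived owner. FileStream, Uploader, validate and the rest are hypothetical names invented for this example. The counter exists only so the result can be observed.

```cpp
#include <cassert>
#include <memory>
#include <stdexcept>
#include <utility>

// Hypothetical resource that counts how many instances are currently open.
class FileStream
{
public:
    FileStream() { ++openCount(); }
    ~FileStream() { --openCount(); }
    FileStream(const FileStream&) = delete;
    FileStream& operator=(const FileStream&) = delete;
    static int& openCount() { static int count = 0; return count; }
};

// Hypothetical long-lived consumer, e.g. a background task that keeps the stream.
class Uploader
{
public:
    void start(std::unique_ptr<FileStream> stream) { _stream = std::move(stream); }
    bool busy() const { return _stream != nullptr; }
private:
    std::unique_ptr<FileStream> _stream;
};

void validate(bool fail)
{
    if (fail) throw std::runtime_error("validation failed");
}

// Opens a stream, does work that may throw, then hands the stream off.
void openAndHandOff(Uploader& uploader, bool fail)
{
    auto stream = std::make_unique<FileStream>();
    validate(fail);                    // if this throws, stream is closed during unwinding
    uploader.start(std::move(stream)); // otherwise ownership moves to the uploader
}

struct OpenCounts { int afterFailure; int whileHeld; int afterUploader; };

OpenCounts exerciseHandOff()
{
    OpenCounts counts{};
    {
        Uploader uploader;
        try { openAndHandOff(uploader, true); } catch (const std::runtime_error&) {}
        counts.afterFailure = FileStream::openCount();
        openAndHandOff(uploader, false);
        assert(uploader.busy());
        counts.whileHeld = FileStream::openCount();
    } // uploader is destroyed here, and the stream with it
    counts.afterUploader = FileStream::openCount();
    return counts;
}
```

The stream is closed on the failure path, stays open for as long as the uploader holds it, and is closed when the uploader goes away. The same unique_ptr covers all three cases without any try/finally.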

Adding using/try-with-resources was a great decision, and it’s not the garbage collector and receives no assistance from the garbage collector at all. They are special language features with new keywords. They never could have been added as library features. And they only simulate scope ownership, not unique or shared ownership. Adding them is an admission that the garbage collector isn’t the panacea it promised to be.

Tracing?

The basic idea here is not to bake a specific resource management strategy into the language, but to allow the coder to opt each variable into a specific resource management strategy. We’ve seen that C++ gives us the tools necessary to add reference counting, a strategy sometimes baked directly into languages, as an opt-in library feature. That raises the question: could we do this for tracing as well? Could we build some sort of traced_ptr<T> that will be deleted by a separate process running in a separate thread, that works by tracing the graph of references and determining what is still accessible?

C++ is still missing the crucial capability we need to implement this. The tracer needs to be able to tell what things inside an object are pointers that need to be traced. In order to do that, it needs to be able to collect information about a type, namely what its members are, and their layout, so it can figure out which members are pointers. Well, that’s reflection. Once we get it, it will be part of the template system, and we could actually write a tracer where much of the logic that normally would happen at runtime would be worked out at compile time. The trace_references<T>(traced_ptr<T>& ptr) function would be largely generated at compile time for any T for which a traced_ptr<T> is used somewhere in our program. The runtime logic would not have to work out where the pointers to trace are, it would just have to actually trace them.

Once we get reflection, we can write a traced_ptr<T> class that knows whether or not T has any traced_ptr type members. The destructor of traced_ptr itself will do nothing. The tracer will periodically follow any such members, repeat the step for each of those, and voila: you get opt-in tracing. This is interesting because it greatly mitigates the problem that baked-in tracing has, which is the total uncertainty about the state of an object during destruction. What can you do in the destructor for your class if you have traced_ptrs to it? Well, you can be sure everything except the traced_ptr members is still valid. You just can’t touch the traced_ptr members.

Since it is now your responsibility, and decision, to work out which members of a class will be owned by the tracer, you can draw whatever dividing line you want between deterministic and nondeterministic release. A class that holds both a file handle and other complex objects might decide that the complex objects will be traced_ptrs, but the file handle will be a unique_ptr. That way we don’t have to write a destructor at all, and the destructor the compiler writes for us will delete the file handle, and not touch the complex objects.

There may be problems with delegating only part of your allocations to a tracer. The other key part of a tracer is it keeps track of available memory. To make this work you’d probably also need to provide overrides of operator new and operator delete. But you may also be okay with relaxing the promises of traced references: instead of the tracer doing nothing until it “needs to” (when memory reaches a critical usage threshold), it just runs continuously in the background, giving you assurance you can build some temporary webs of objects that you know aren’t anywhere close to your full memory allotment, and be sure they’ll all be swept away soon after you’re done with them.

While this is a neat idea, I would consider it an even lazier approach to the ownership problem than a proliferation of shared and weak references. This is also more or less avoiding the ownership problem altogether. It may be a neat tool to have in our toolbelts, but I’d probably want a linter to warn on every usage just like with weak_ptrs, to make us think carefully about whether we can be bothered to work out actual ownership.

Conclusion

I have gone over all the options in C++ for deciding how a variable is owned. They are:

- Scope ownership: auto variables with value semantics
- Unique ownership: unique_ptr
- Shared ownership: shared_ptr
- Manual ownership: new and delete

Then there are two options for how to borrow a variable:

- Raw (unowned) pointers/references
- Weak references: weak_ptr

This list is in deliberate order. You should prefer the first option, and only go to the next option if that can’t work, and so on. These options really cover all the possibilities of ownership. Neither garbage collected nor reference counted languages give you all the options. Really, the first two are the most important. Resource management is far simpler when you can make the vast majority of cases scope or unique ownership. Unique ownership (that can cross scope boundaries) is, no pun intended, unique to C++.

For this reason, I have far fewer resource leaks in C++ than in other languages, far less boilerplate code to write, and the vast majority of dangling references I’ve encountered were caused by inappropriate use of shared ownership, due to me coming from reference counted languages and thinking in terms of sharing everything. Almost all my desires to use weak references were me being lazy and not fixing cyclical references (it’s not some subtle realization after profiling that they exist, it’s obvious when I write the code they are cyclical).

I wouldn’t add a garbage collector to that code if you paid me.